ICCV 2025 | Mitigating Object Hallucinations via Sentence-Level Early Intervention

Shangpin Peng*1, Senqiao Yang*2, Li Jiang3, Zhuotao Tian1✉️

1Harbin Institute of Technology, Shenzhen

2The Chinese University of Hong Kong

3The Chinese University of Hong Kong, Shenzhen

* Equal contribution

✉️ Corresponding author: tianzhuotao@hit.edu.cn

🎊 News

- [2025.07.30] 🔍 Our work has been featured and explained by 52CV, check it out here.

- [2025.07.21] 📖 All code, data, and models are released!

- [2025.06.26] 🎉 SENTINEL has been accepted to ICCV 2025!

🚀 Overview

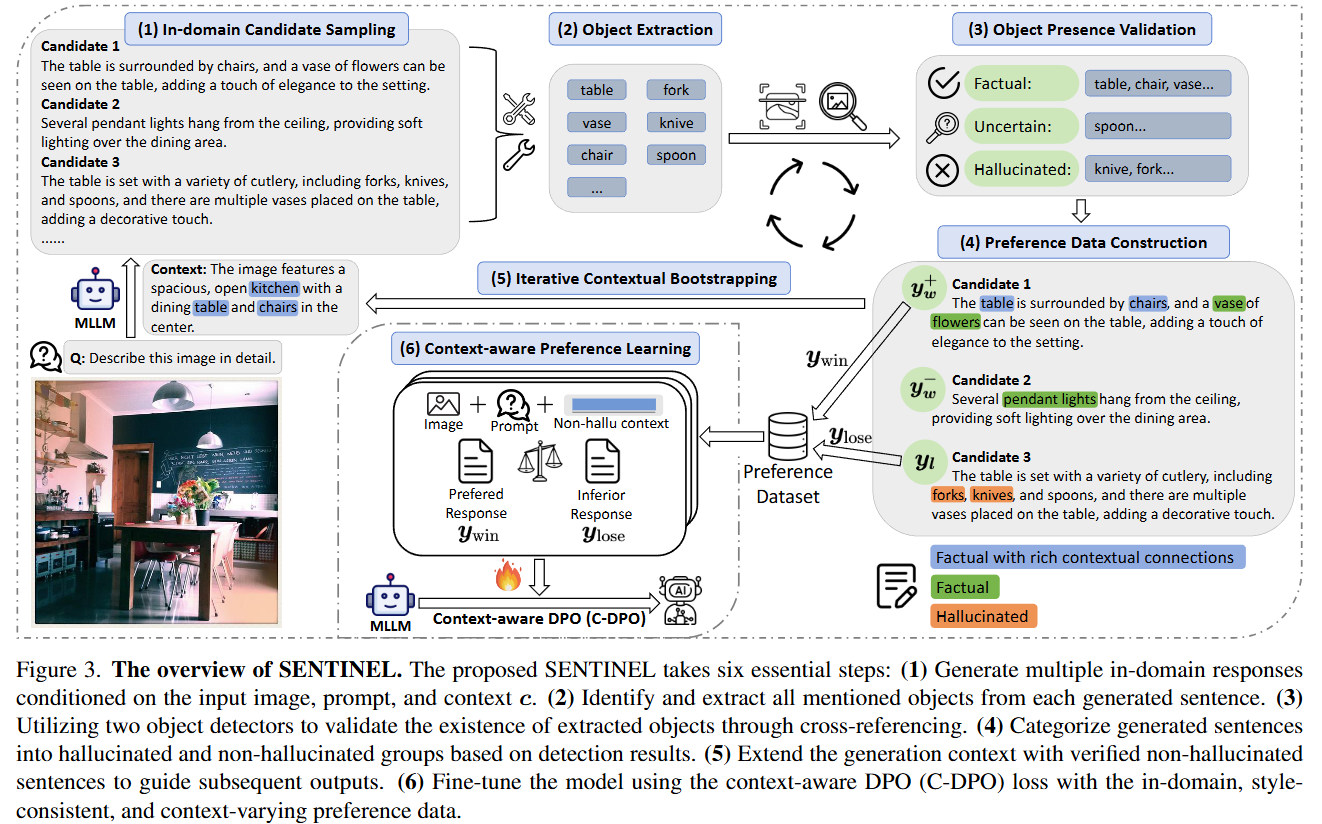

SENTINEL introduces an automatic, sentence-level early intervention strategy to prevent and mitigate object hallucinations in multimodal large language models (MLLMs). Key advantages:

- Annotation-free: No human labeling required.

- Model-agnostic: Compatible with any MLLM architecture.

- Efficient: Lightweight LoRA fine-tuning.

🔑 Key Features

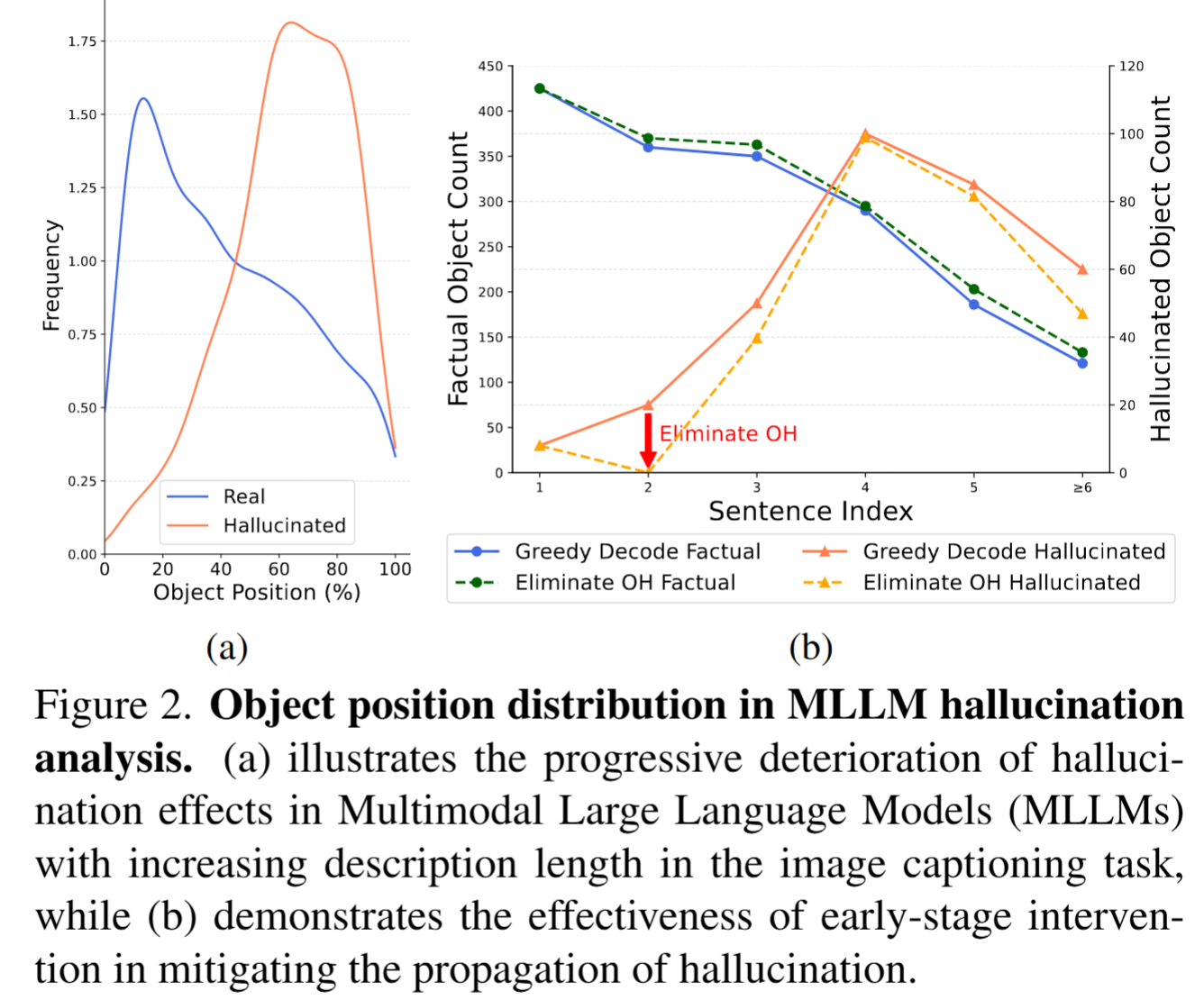

- Early intervention halts hallucination propagation. We find that hallucinations of MLLMs predominantly arise in early sentences and propagate through the rest of the output. SENTINEL interrupts this chain early to maximize mitigation.

- In-domain contextual preference learning without human labels. SENTINEL constructs hallucinated/factual samples via detector cross-validation and builds context-aware preference data without relying on proprietary LLMs or manual annotations.

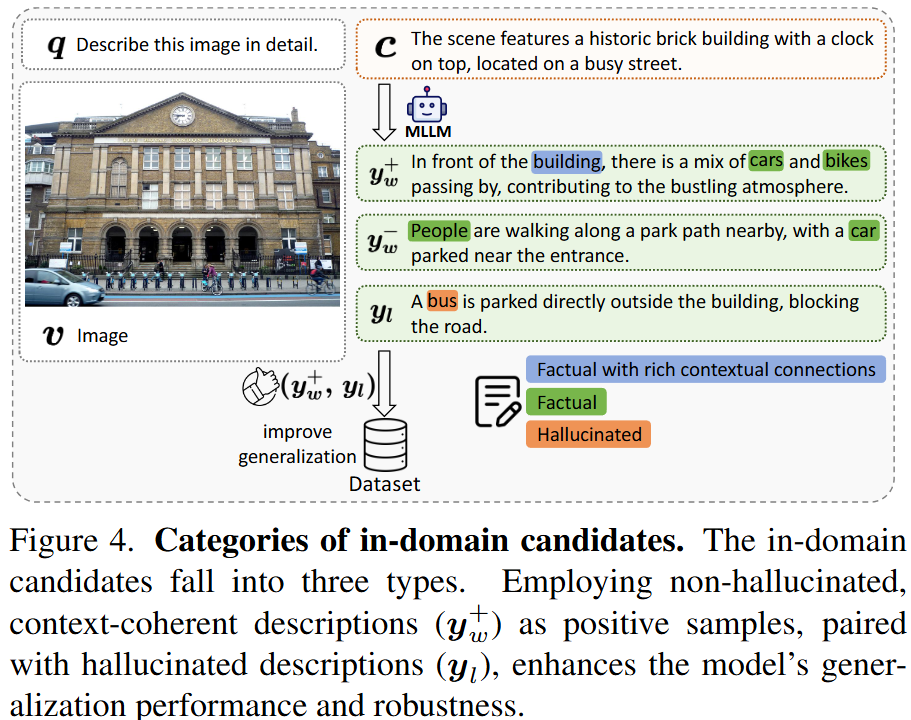

- Context matters: rich coherence drives robustness. By prioritizing context-coherent positive samples over hallucinated ones, SENTINEL significantly boosts generalization.

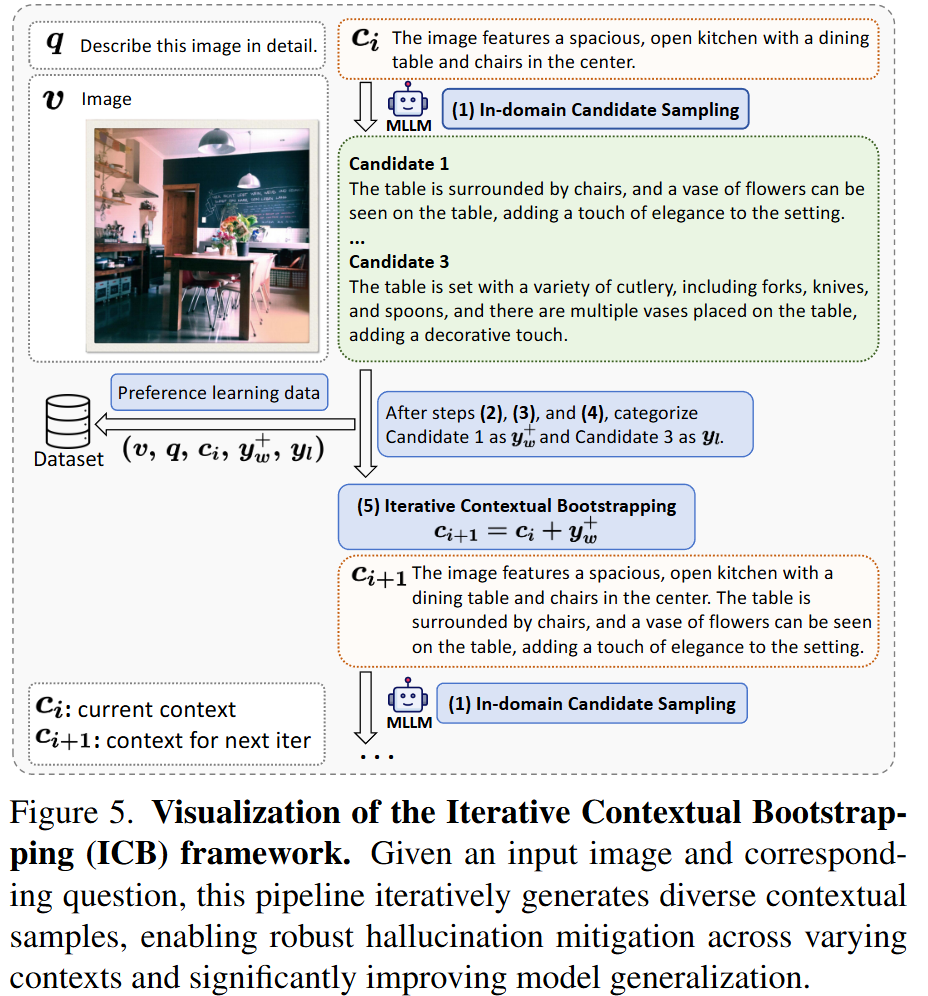

- Iterative contextual bootstrapping for diverse hallucination-free contexts. Our pipeline dynamically grows non-hallucinated contexts and expands coverage across varied scenes, improving robustness across generations.

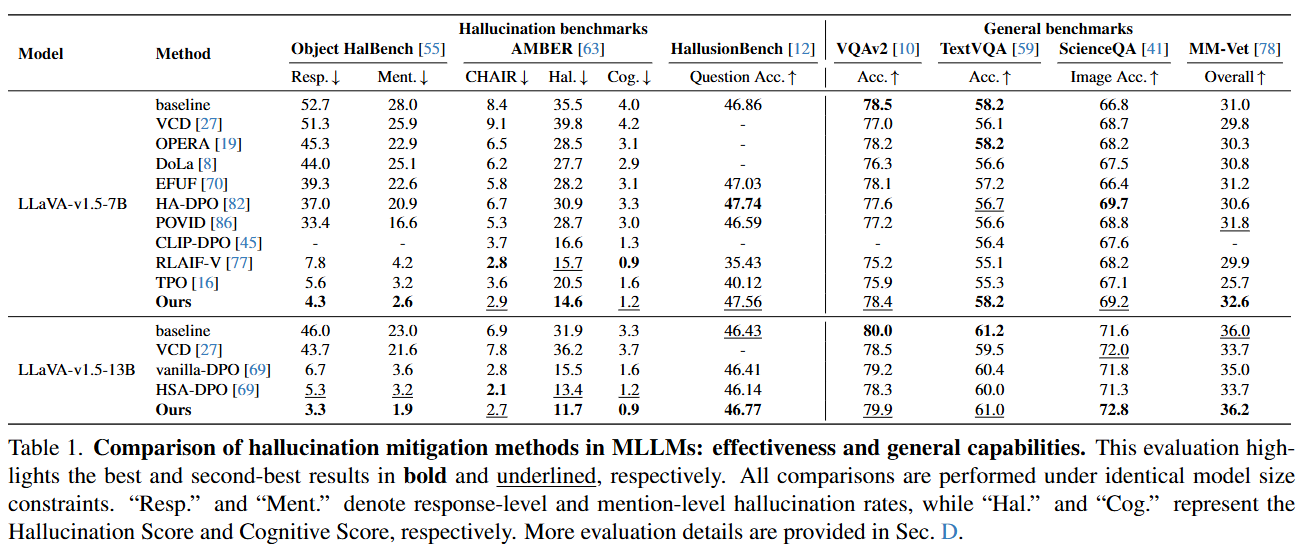

- State-of-the-art results across benchmarks. SENTINEL achieves up to a 92% reduction in hallucinations and outperforms prior SOTA methods on Object HalBench, AMBER, and HallusionBench, while maintaining or improving general task performance.
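The detector cross-validation and contextual bootstrapping steps described above can be illustrated with a small sketch. This is not the released SENTINEL code: the function names, the `PreferencePair` type, and the agreement rule (an object counts as factual only when every detector finds it, as hallucinated only when none does, and is otherwise skipped as uncertain) are assumptions made for illustration. Detector outputs and candidate sentences are passed in as plain Python data, standing in for real detector calls and for sentences sampled from the MLLM.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Hypothetical types: a candidate is (sentence, objects mentioned in it);
# each detector's output is the set of object names it found in the image.
Candidate = tuple[str, list[str]]


def cross_validate(obj: str, detections: list[set[str]]) -> str:
    """Label one mentioned object by agreement among detectors (assumed rule)."""
    votes = [obj in found for found in detections]
    if all(votes):
        return "factual"
    if not any(votes):
        return "hallucinated"
    return "uncertain"  # detectors disagree, so the object is not used


def label_sentence(objects: list[str], detections: list[set[str]]) -> str:
    """A sentence is hallucinated if any object is; factual only if all are."""
    if not objects:
        return "uncertain"  # assumption: sentences without objects are skipped
    labels = [cross_validate(o, detections) for o in objects]
    if "hallucinated" in labels:
        return "hallucinated"
    if "uncertain" in labels:
        return "uncertain"
    return "factual"


@dataclass
class PreferencePair:
    context: str   # hallucination-free text generated so far
    chosen: str    # factual next sentence
    rejected: str  # hallucinated next sentence


def build_pair(context: str, candidates: list[Candidate],
               detections: list[set[str]]) -> Optional[PreferencePair]:
    """Pair the first factual candidate with the first hallucinated one."""
    factual = [s for s, objs in candidates
               if label_sentence(objs, detections) == "factual"]
    hallucinated = [s for s, objs in candidates
                    if label_sentence(objs, detections) == "hallucinated"]
    if not factual or not hallucinated:
        return None
    return PreferencePair(context, factual[0], hallucinated[0])


def bootstrap(context: str, rounds: list[list[Candidate]],
              detections: list[set[str]]) -> list[PreferencePair]:
    """Grow the context with each chosen sentence and collect one pair per round."""
    pairs = []
    for candidates in rounds:
        pair = build_pair(context, candidates, detections)
        if pair is None:
            break
        pairs.append(pair)
        context = f"{context} {pair.chosen}"  # extend the hallucination-free context
    return pairs


# Toy example with two made-up detector outputs for one image.
example_detections = [{"dog", "frisbee"}, {"dog", "frisbee", "tree"}]
example_rounds = [
    [("A dog leaps to catch a frisbee.", ["dog", "frisbee"]),
     ("A man throws a frisbee to the dog.", ["man", "frisbee", "dog"]),
     ("A tree stands in the background.", ["tree"])],
    [("The dog is in mid-air.", ["dog"]),
     ("A bench sits nearby.", ["bench"])],
]
for p in bootstrap("The photo shows a park.", example_rounds, example_detections):
    print(p)
```

In the toy example, "man" and "bench" are found by neither detector, so the sentences mentioning them become the rejected samples, while "tree" is found by only one detector and its sentence is left out of the data. Each pair shares the same context, which is the in-domain, context-aware aspect the list above refers to.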

📝 Citation

If you find our model/code/data/paper helpful, please consider citing our paper 📝 and starring us ⭐️!

@inproceedings{peng2025mitigating,
  title={Mitigating object hallucinations via sentence-level early intervention},
  author={Peng, Shangpin and Yang, Senqiao and Jiang, Li and Tian, Zhuotao},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={635--646},
  year={2025}
}

📧 Contact us

If you have any questions, comments, or suggestions, please do not hesitate to submit an issue or PR to help advance research in this area.